It’s not enough to just test our code's happy path (in other words, the error-free path we hope our users will take).

To be really confident our code can’t be abused, either accidentally or on purpose, we must actively attack it to find ways of breaking it. If we don’t do this, someone else will, and they probably won’t be as friendly.

I like to call this defensive testing, because it is a way of provoking defensive programming: preemptively designing our code to be resilient in the face of errors and attacks. We want to ensure that our code can gracefully handle invalid or unexpected user input and behavior.

In this article, we'll look at fuzz testing, which lets us efficiently exercise our code in many possible ways with minimal effort. Using fuzz testing, we will generate a variety of inputs to throw at our REST API. The aim is to find edge cases and fix errors in our code before they reach production.

Code Examples

Two working example projects are available on GitHub to demonstrate the ideas presented in this article. Clone the code repository using Git or download and unpack the zip file.

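You’ll need Node.js installed to run the example code. Please try out each example for yourself as you follow along:

- https://github.com/ashleydavis/fuzz-testing-a-rest-api
- https://github.com/ashleydavis/fuzz-testing-from-open-api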

Generating Data From a JSON Schema

There are many ways we can generate data for fuzz testing, and a bunch of packages available on npm, such as Chance and Fakerjs. But I want to generate data from a JSON schema because that is a convenient way to represent a data format. Also, the OpenAPI spec (often used to describe and document REST APIs) contains JSON schemas for HTTP requests and responses. It would be very convenient to describe a REST API with an OpenAPI spec and then automatically generate tests from that same spec.

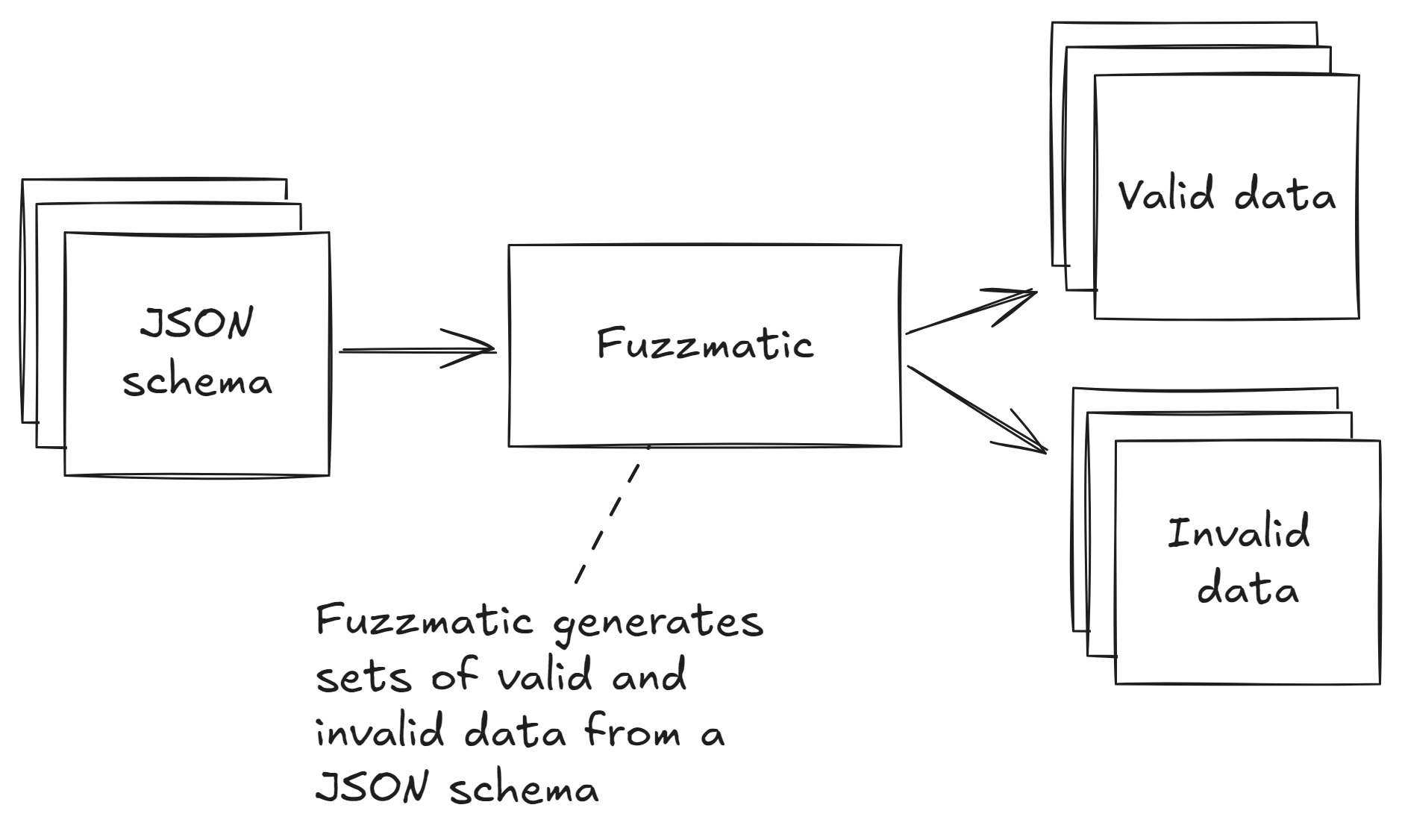

I couldn’t find any open-source code libraries that could generate data from a JSON schema (although there are some proprietary or paid options that I don’t want to use). So I created my own library, Fuzzmatic, that takes a JSON schema as input and generates many combinations of valid data that matches the schema (and invalid data that does not match the schema). Here's an illustration of how this works:

Figure 1: Fuzzmatic generates sets of valid and invalid data from a JSON schema.
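To make the idea behind Figure 1 concrete, here is a much-simplified, hypothetical generator. It is not Fuzzmatic's actual algorithm; it is a sketch that assumes the schema is an object whose properties are numbers (optionally with `minimum`) or strings (optionally with `minLength`), which is all the blog-post schema shown below needs. It builds one baseline object that satisfies every rule, then varies one property at a time: boundary values produce valid data, while values that break a rule, missing required properties, extra properties, and non-objects produce invalid data.

```javascript
// Hypothetical sketch, NOT Fuzzmatic's actual algorithm. It supports only
// object schemas whose properties are numbers (optionally with `minimum`)
// or strings (optionally with `minLength`).

// Returns boundary values for one property: some that satisfy its schema
// and some that break it.
function propertyCases(propSchema) {
    if (propSchema.type === "number") {
        const hasMin = propSchema.minimum !== undefined;
        const min = hasMin ? propSchema.minimum : 0;
        return {
            valid: [min, min + 9],                     // At and above the minimum.
            invalid: hasMin ? [min - 1, "a"] : ["a"],  // Below the minimum; wrong type.
        };
    }
    if (propSchema.type === "string") {
        const minLength = propSchema.minLength ?? 0;
        const invalid = [42];                           // Wrong type.
        if (minLength > 0) {
            invalid.unshift("a".repeat(minLength - 1)); // One character too short.
        }
        return { valid: ["a".repeat(Math.max(minLength, 1))], invalid };
    }
    throw new Error(`Unsupported property type: ${propSchema.type}`);
}

function generateData(schema) {
    const names = Object.keys(schema.properties);
    const required = schema.required ?? [];
    const cases = Object.fromEntries(names.map(name => [name, propertyCases(schema.properties[name])]));

    // A baseline object that satisfies every rule.
    const baseline = Object.fromEntries(names.map(name => [name, cases[name].valid[0]]));

    const valid = [];
    const invalid = required.length > 0 ? [{}] : []; // {} lacks every required property.

    for (const name of names) {
        // Vary one property at a time, keeping the others at their baseline values.
        for (const value of cases[name].valid) {
            valid.push({ ...baseline, [name]: value });
        }
        for (const value of cases[name].invalid) {
            invalid.push({ ...baseline, [name]: value });
        }
        // Leaving out a required property breaks the schema.
        if (required.includes(name)) {
            const { [name]: _omitted, ...rest } = baseline;
            invalid.push(rest);
        }
    }

    // An unexpected property breaks `additionalProperties: false`.
    if (schema.additionalProperties === false) {
        invalid.push({ ...baseline, unexpected: "a" });
    }

    // Values that aren't objects at all.
    invalid.push(null, 42, "a", true);

    // Drop duplicates (several variations are identical to the baseline).
    const unique = items => [...new Map(items.map(item => [JSON.stringify(item), item])).values()];
    return { valid: unique(valid), invalid: unique(invalid) };
}

const postSchema = {
    type: "object",
    required: ["userId", "title", "body"],
    properties: {
        userId: { type: "number", minimum: 1 },
        title: { type: "string", minLength: 1 },
        body: { type: "string", minLength: 1 },
    },
    additionalProperties: false,
};

const data = generateData(postSchema);
console.log(JSON.stringify(data.valid));
// Prints: [{"userId":1,"title":"a","body":"a"},{"userId":10,"title":"a","body":"a"}]
```

Varying one property at a time keeps the output small and makes each invalid entry fail for exactly one reason, which is handy when a test fails. A real generator would also need to handle nested objects, arrays, enums, formats, and combinations of rules.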

As an example, consider the JSON schema below (in YAML format for readability). This describes the payload for a REST API request that creates a blog post (modeled after the JSON Placeholder example REST API). The schema describes the required properties for the JSON body of an HTTP request to the /posts endpoint of the REST API.

```yaml
title: POST /posts payload
type: object
required:
  - userId
  - title
  - body
properties:
  userId:
    type: number
    minimum: 1
  title:
    type: string
    minLength: 1
  body:
    type: string
    minLength: 1
additionalProperties: false
```

We can use Fuzzmatic from the command line to generate a data set from the JSON schema. First, install it:

```bash
npm install -g fuzzmatic
```

Now we can run it against the JSON schema:

```bash
fuzzmatic my-json-schema.yaml
```

Fuzzmatic prints the generated data to the terminal in JSON format. You can see an abbreviated example of the data below.

```jsonc
{
    "valid": [ // Examples of valid data.
        {
            "userId": 1,
            "title": "a",
            "body": "a"
        },
        {
            "userId": 10,
            "title": "a",
            "body": "a"
        },
        // --snip--
    ],
    "invalid": [ // Examples of invalid data.
        {},
        {
            "userId": 0,
            "title": "a",
            "body": "a"
        },
        {
            "userId": -100,
            "title": "a",
            "body": "a"
        },
        // --snip--
        null,
        42,
        "a",
        true
    ]
}
```

We can use each entry in the valid and invalid sets to create tests against our REST API. In this example, we might make an HTTP request with each entry in the valid set and expect a success status code (201 Created, in the case of the endpoint we test below). For invalid entries, we might expect an HTTP status code of 400 (Bad Request). By throwing many combinations of inputs at our REST API, we can be confident that it handles many situations, valid and invalid, without problems.
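To see why entries such as `{}` or `{"userId": 0, ...}` end up in the invalid set, it helps to check them against the schema by hand. The sketch below is a deliberately tiny, hypothetical validator that understands only the keywords used in this schema (`type`, `required`, `properties`, `minimum`, `minLength`, `additionalProperties`); in a real project you would use a complete JSON Schema validator such as Ajv instead. The `expectedStatus` helper applies the mapping described above, assuming the 201/400 pair used by this article's endpoint.

```javascript
// Hypothetical sketch: validates only the JSON Schema keywords used by the
// POST /posts payload. Returns a list of error messages; empty means valid.
const TYPE_CHECKS = {
    object: value => typeof value === "object" && value !== null && !Array.isArray(value),
    number: value => typeof value === "number" && Number.isFinite(value),
    string: value => typeof value === "string",
};

function validate(value, schema, path = "$") {
    const check = TYPE_CHECKS[schema.type];
    if (check && !check(value)) {
        return [`${path}: expected ${schema.type}`];
    }

    const errors = [];
    if (typeof value === "number" && schema.minimum !== undefined && value < schema.minimum) {
        errors.push(`${path}: must be >= ${schema.minimum}`);
    }
    if (typeof value === "string" && schema.minLength !== undefined && value.length < schema.minLength) {
        errors.push(`${path}: must have length >= ${schema.minLength}`);
    }
    if (schema.type === "object") {
        for (const name of schema.required ?? []) {
            if (!Object.prototype.hasOwnProperty.call(value, name)) {
                errors.push(`${path}.${name}: is required`);
            }
        }
        for (const [name, propValue] of Object.entries(value)) {
            const propSchema = schema.properties?.[name];
            if (propSchema) {
                errors.push(...validate(propValue, propSchema, `${path}.${name}`));
            } else if (schema.additionalProperties === false) {
                errors.push(`${path}.${name}: is not allowed`);
            }
        }
    }
    return errors;
}

// The status a well-behaved endpoint should return (201/400 are assumed to
// match the okStatus/errorStatus of the endpoint tested in this article).
function expectedStatus(payload, schema) {
    return validate(payload, schema).length === 0 ? 201 : 400;
}

const postSchema = {
    type: "object",
    required: ["userId", "title", "body"],
    properties: {
        userId: { type: "number", minimum: 1 },
        title: { type: "string", minLength: 1 },
        body: { type: "string", minLength: 1 },
    },
    additionalProperties: false,
};

const samples = [
    { userId: 1, title: "a", body: "a" },
    { userId: 0, title: "a", body: "a" },
    {},
    null,
];
for (const payload of samples) {
    console.log(JSON.stringify(payload), "->", expectedStatus(payload, postSchema), validate(payload, postSchema));
}
```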

Testing REST APIs with Generated Data

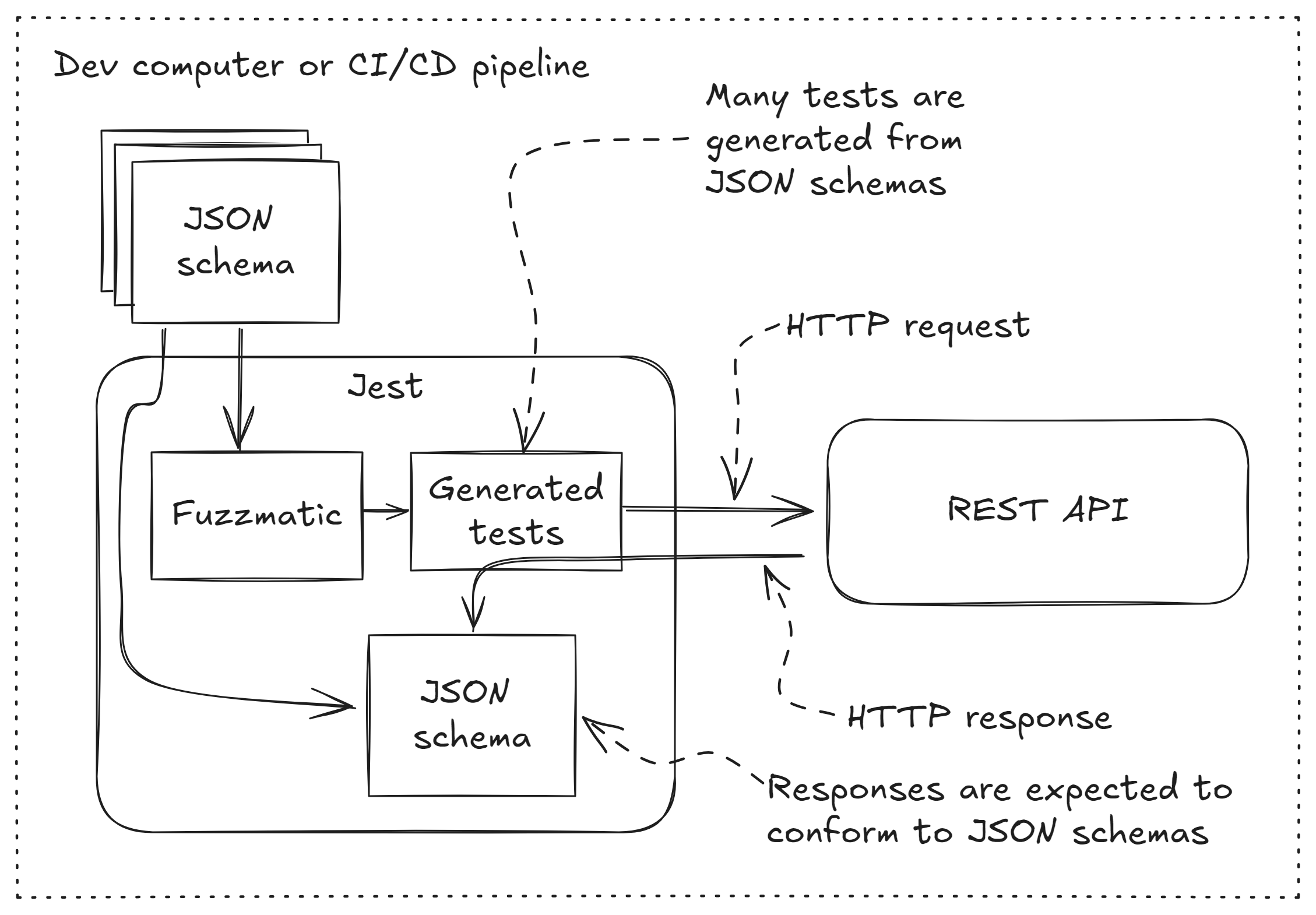

Now that we can generate data from a JSON schema, let’s use that generated data to create a series of tests for our REST API. We’ll use Fuzzmatic to create each HTTP request's body and Jest to generate a test suite using Jest’s test.each function. Here's how it works:

Figure 2: Fuzz testing a REST API with Fuzzmatic and Jest.

You can try it out for yourself by getting a local copy of the code.

To get set up, first open a terminal. Then get a local copy of the code, change into the directory, and install the project dependencies:

```bash
git clone https://github.com/ashleydavis/fuzz-testing-a-rest-api
cd fuzz-testing-a-rest-api
npm install
```

Now run the tests:

```bash
npm test
```

You should see a number of passing tests that exercise the HTTP endpoint for creating a blog post in our example REST API. The REST API uses a mock version of MongoDB during testing, so you don’t need a local database. We explored mocking external dependencies in my previous post, Streamlined Contract Testing in Node.js: A Simple and Achievable Approach.

The generated tests are created from the test specification shown below. It details the HTTP endpoints we’d like to test (only one in this example, to keep it simple). It also contains the JSON schemas for the body of the HTTP request and its response. Each endpoint's spec details the status and response to expect for valid and invalid data.

```yaml
schema:
  definitions:
    CreatePostPayload: # Defines the payload of the HTTP request.
      title: POST /posts payload
      type: object
      required:
        - userId
        - title
        - body
      properties:
        userId:
          type: number
          minimum: 1
        title:
          type: string
          minLength: 1
        body:
          type: string
          minLength: 1
      additionalProperties: false

    CreatePostResponse: # Defines the HTTP response.
      title: POST /posts response
      type: object
      required:
        - _id
      properties:
        _id:
          type: string
      additionalProperties: false

specs:
  - title: Adds a new blog post # Test spec for the endpoint that creates a blog post.
    description: Adds a new blog post to the REST API.
    fixture: many-posts
    method: post
    url: /posts
    headers:
      Content-Type: application/json; charset=utf-8
    body:
      $ref: "#/schema/definitions/CreatePostPayload"
    expected: # What we expect depending on whether the request succeeds or fails.
      okStatus: 201
      errorStatus: 400
      headers:
        Content-Type: application/json; charset=utf-8
      okBody:
        $ref: "#/schema/definitions/CreatePostResponse"
```

Next, you can see how we use Fuzzmatic’s generateData function to expand the spec for each HTTP endpoint into many specs, covering the valid and invalid payloads that can be sent to the endpoint. We use test.each with the expanded list of specs to create a suite of Jest tests that exercises the endpoint with each generated combination of valid and invalid data.

We use Axios to make each HTTP request and subsequently check that the response matches our expectations, depending on whether it should have succeeded or failed. To learn more about generating tests, contract testing, and seeding the database with data fixtures, please refer back to Streamlined Contract Testing in Node.js.

```javascript
// --snip--

describe("Fuzz tests from JSON Schema", () => {

    // Loads the test spec.
    const testSpec = resolveRefs(yaml.parse(fs.readFileSync(`${__dirname}/test-spec.yaml`, 'utf-8')));

    // Expands the test spec by generating valid and invalid sets of data.
    const fuzzedSpecs = testSpec.specs.flatMap(spec => {
        const data = generateData(spec.body);

        const validSpecs = data.valid
            .filter(validBody => isObject(validBody)) // Must be an object for Axios.
            .map((validBody, index) => {
                return {
                    ...spec,
                    title: `${spec.title} - valid #${index+1}`,
                    body: validBody,
                    expected: {
                        status: spec.expected.okStatus,
                        body: spec.expected.okBody,
                    },
                };
            });

        const invalidSpecs = data.invalid
            .filter(invalidBody => isObject(invalidBody)) // Must be an object for Axios.
            .map((invalidBody, index) => {
                return {
                    ...spec,
                    title: `${spec.title} - invalid #${index+1}`,
                    body: invalidBody,
                    expected: {
                        status: spec.expected.errorStatus,
                        body: spec.expected.errorBody,
                    },
                };
            });

        return [...validSpecs, ...invalidSpecs];
    });

    // --snip--

    // Generates the Jest test suite.
    test.each(fuzzedSpecs)(`$title`, async spec => {

        // --snip--

        const response = await axios({ // Makes the HTTP request.
            method: spec.method,
            url: spec.url,
            baseURL,
            data: spec.body,
            validateStatus: () => true, // Never throw on 4xx/5xx; we check the status ourselves.
        });

        if (spec.expected.status) { // Match status.
            expect(response.status).toEqual(spec.expected.status);
        }

        if (spec.expected.headers) { // Match headers.
            for (const [headerName, expectedValue] of Object.entries(spec.expected.headers)) {
                const actualValue = response.headers[headerName.toLowerCase()];
                expect(actualValue).toEqual(expectedValue);
            }
        }

        if (spec.expected.body) { // Match response.
            expectMatchesSchema(response.data, spec.expected.body);
        }
    });
});
```

Generating Tests From a Swagger/OpenAPI Spec

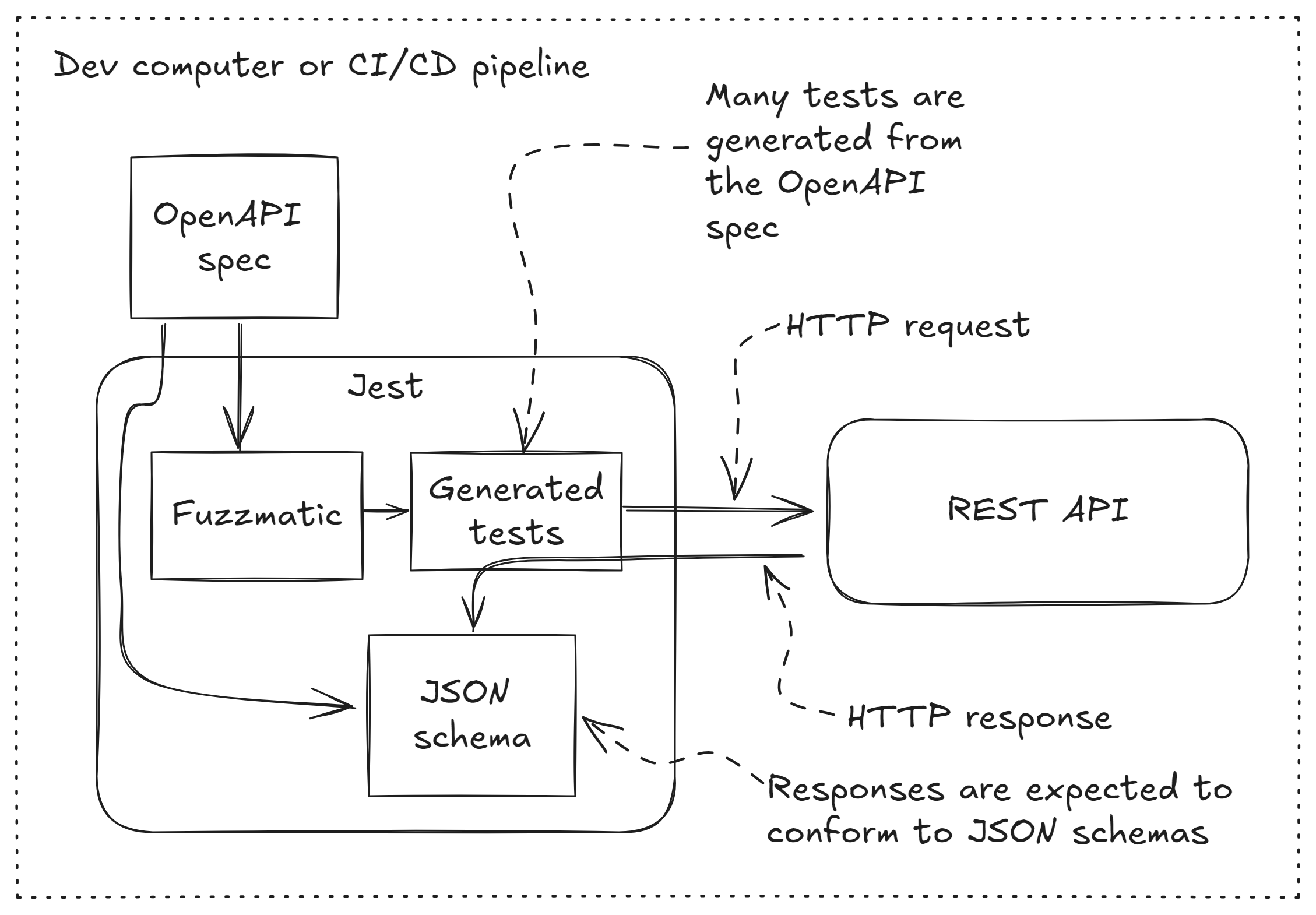

Now we get to what I really wanted right from the start: The ability to generate tests from an OpenAPI spec. Let's say we already have an OpenAPI spec for our REST API (as many of us do to document our REST API). With a little more work, we can generate our test suite from the openapi.yaml file that we already have in the root of our code repository. You can see how this looks:

Again, please try it out for yourself using the example project.

To get set up, first open a terminal. Then get a local copy of the code, change into the directory, and install the project dependencies:

```bash
git clone https://github.com/ashleydavis/fuzz-testing-from-open-api
cd fuzz-testing-from-open-api
npm install
```

Now run the tests:

```bash
npm test
```

You should see a bunch of passing tests. This time, though, the tests have been created from the OpenAPI spec below. It is a standard OpenAPI spec, except for the custom properties x-okStatus and x-errStatus. Properties that start with x- are OpenAPI specification extensions, which other OpenAPI tools generally ignore, but we use them so our generated tests know which HTTP status code to expect when the endpoint under test succeeds or fails.

```yaml
openapi: 3.0.0
info:
  version: 1.0.0
  title: JSON Placeholder API
  description: See https://jsonplaceholder.typicode.com/
paths:
  /posts:
    post: # Defines the HTTP POST endpoint /posts
      description: Adds a new blog post
      requestBody:
        required: true
        content:
          "application/json":
            schema:
              $ref: "#/components/schemas/CreatePostPayload"
      responses:
        "201":
          description: Post created
          content:
            "application/json":
              schema:
                $ref: "#/components/schemas/CreatePostResponse"
        "400":
          description: Failed to create post
      x-okStatus: 201 # The status code expected for valid data.
      x-errStatus: 400 # The status code expected for invalid data.
components:
  schemas:
    CreatePostPayload: # Defines the payload for HTTP requests.
      title: POST /posts payload
      type: object
      required:
        - userId
        - title
        - body
      properties:
        userId:
          type: number
          minimum: 1
        title:
          type: string
          minLength: 1
        body:
          type: string
          minLength: 1
      additionalProperties: false
    CreatePostResponse: # Defines the HTTP response.
      title: POST /posts response
      type: object
      required:
        - _id
      properties:
        _id:
          type: string
      additionalProperties: false
```

Our testing code looks a little different now because we must generate our test specs from the OpenAPI spec, as shown below:

```javascript
// --snip--

describe("Fuzz tests from OpenAPI", () => {

    // Loads the OpenAPI spec.
    const openApiDef = resolveRefs(yaml.parse(fs.readFileSync(`./openapi.yaml`, 'utf-8')));

    // Generates test specs from the OpenAPI spec.
    const fuzzedSpecs = Object.entries(openApiDef.paths).flatMap(([path, pathSpec]) => {
        return Object.entries(pathSpec).flatMap(([method, methodSpec]) => {
            const bodySchema = methodSpec.requestBody?.content?.["application/json"]?.schema;
            if (!bodySchema) {
                return [];
            }

            // How does the endpoint handle success?
            const okStatus = methodSpec["x-okStatus"];
            const okBody = methodSpec.responses?.[okStatus.toString()]?.content?.["application/json"]?.schema;

            // How does the endpoint handle failure?
            const errorStatus = methodSpec["x-errStatus"];
            const errorBody = methodSpec.responses?.[errorStatus.toString()]?.content?.["application/json"]?.schema;

            const data = generateData(bodySchema);
            const specTitle = methodSpec.description || `${method} ${path}`;

            const validSpecs = data.valid
                .filter(validBody => isObject(validBody)) // Must be an object for Axios.
                .map((validBody, index) => {
                    return {
                        title: `${specTitle} - valid #${index+1}`,
                        fixture: "many-posts",
                        method,
                        url: path,
                        headers: {
                            "Content-Type": "application/json",
                        },
                        body: validBody,
                        expected: {
                            status: okStatus,
                            body: okBody,
                        },
                    };
                });

            const invalidSpecs = data.invalid
                .filter(invalidBody => isObject(invalidBody)) // Must be an object for Axios.
                .map((invalidBody, index) => {
                    return {
                        title: `${specTitle} - invalid #${index+1}`,
                        fixture: "many-posts",
                        method,
                        url: path,
                        headers: {
                            "Content-Type": "application/json",
                        },
                        body: invalidBody,
                        expected: {
                            status: errorStatus,
                            body: errorBody,
                        },
                    };
                });

            return [...validSpecs, ...invalidSpecs];
        });
    });

    // --snip--

    // Generates the Jest test suite:
    test.each(fuzzedSpecs)(`$title`, async spec => {
        //
        // Testing is the same as before.
        // 1. Make the HTTP request.
        // 2. Check the status, headers and response match expectations.
        //
    });
});
```

Again, we use Fuzzmatic to generate sets of valid and invalid data to send to our HTTP endpoint. This time, though, we use the custom properties x-okStatus and x-errStatus to figure out which responses we should match with valid and invalid data. In this way, we make sure our HTTP endpoint has the correct response depending on the data we pass to it.
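Both listings call a resolveRefs helper whose code isn't shown here. Judging by the generated specs, where the expected body appears as a fully inlined schema, its job is to replace each `$ref` pointer (such as `#/components/schemas/CreatePostPayload`) with the object it points to, so that Fuzzmatic and the response checks receive complete schemas. The following is a minimal sketch of such a helper, not necessarily how the example projects implement it. It assumes every `$ref` is local (starts with `#/`) and that there are no circular references, and it ignores any keys that sit next to a `$ref`.

```javascript
// Hypothetical sketch of a resolveRefs helper: returns a copy of `root` in
// which every { $ref: "#/..." } object is replaced by the value it points to.
// Assumes local references only and no cycles (a cycle would recurse forever).
function resolveRefs(root) {
    // Follows a JSON Pointer such as "#/components/schemas/CreatePostPayload".
    function lookup(ref) {
        if (!ref.startsWith("#/")) {
            throw new Error(`Only local $refs are supported: ${ref}`);
        }
        return ref
            .slice(2)
            .split("/")
            // Unescape JSON Pointer tokens: "~1" means "/" and "~0" means "~".
            .map(token => token.replace(/~1/g, "/").replace(/~0/g, "~"))
            .reduce((node, token) => {
                if (typeof node !== "object" || node === null || !(token in node)) {
                    throw new Error(`Unresolvable $ref: ${ref}`);
                }
                return node[token];
            }, root);
    }

    function resolve(node) {
        if (Array.isArray(node)) {
            return node.map(resolve);
        }
        if (node !== null && typeof node === "object") {
            if (typeof node.$ref === "string") {
                // The target may itself contain $refs, so resolve it too.
                return resolve(lookup(node.$ref));
            }
            return Object.fromEntries(
                Object.entries(node).map(([key, value]) => [key, resolve(value)])
            );
        }
        return node; // Strings, numbers, booleans and null are returned as-is.
    }

    return resolve(root);
}

// Usage with a cut-down version of the OpenAPI spec above.
const doc = {
    paths: {
        "/posts": {
            post: {
                requestBody: {
                    content: {
                        "application/json": {
                            schema: { $ref: "#/components/schemas/CreatePostPayload" },
                        },
                    },
                },
            },
        },
    },
    components: {
        schemas: {
            CreatePostPayload: { type: "object", required: ["userId"] },
        },
    },
};

const resolved = resolveRefs(doc);
console.log(resolved.paths["/posts"].post.requestBody.content["application/json"].schema);
```

Returning a new object rather than mutating the parsed YAML keeps the original document intact, which can be useful when logging it for debugging.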

Debugging Generated Tests

We’ll likely find numerous failures when we first run our generated tests. For example, when writing the code for this article, I noticed that I had no validation on any of the inputs for creating a blog post through the REST API. You might also discover bugs in your code, such as valid data that should yield a success status code (such as 200 or 201) but instead comes back with a 400 or 500.
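The fix for a finding like missing validation is the defensive programming described at the start: reject bad input explicitly with a 400 rather than letting it reach the database or crash the handler. Here is a framework-agnostic sketch. `handleCreatePost`, `checkCreatePostPayload`, and the in-memory `posts` array are hypothetical stand-ins for the example project's route handler and MongoDB collection; the checks mirror the CreatePostPayload schema, and the shape of the error body is an assumption.

```javascript
const crypto = require("node:crypto");

// Hypothetical stand-in for the MongoDB collection used by the example project.
const posts = [];

// Returns a list of problems with the payload; an empty list means it is valid.
// The rules mirror the CreatePostPayload schema.
function checkCreatePostPayload(payload) {
    if (typeof payload !== "object" || payload === null || Array.isArray(payload)) {
        return ["payload must be a JSON object"];
    }
    const problems = [];
    const allowed = new Set(["userId", "title", "body"]);
    for (const key of Object.keys(payload)) {
        if (!allowed.has(key)) {
            problems.push(`unexpected property: ${key}`); // additionalProperties: false
        }
    }
    if (typeof payload.userId !== "number" || payload.userId < 1) {
        problems.push("userId must be a number >= 1");
    }
    for (const key of ["title", "body"]) {
        if (typeof payload[key] !== "string" || payload[key].length < 1) {
            problems.push(`${key} must be a non-empty string`);
        }
    }
    return problems;
}

// Returns { status, body } instead of writing to a real HTTP response.
function handleCreatePost(payload) {
    const problems = checkCreatePostPayload(payload);
    if (problems.length > 0) {
        return { status: 400, body: { errors: problems } }; // Reject before touching storage.
    }
    const _id = crypto.randomUUID();
    posts.push({ _id, ...payload });
    return { status: 201, body: { _id } }; // Matches the CreatePostResponse schema.
}

console.log(handleCreatePost({ userId: 0, title: "", body: "a" }));
```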

When we find problems, we must be able to debug individual tests, which can be tricky because the data we test with is generated and isn’t actually stored anywhere.

I didn’t include it in the code listings above, but in the actual code for the two example projects, you can uncomment code that logs the generated data and the final list of generated test specs. This is really useful when you have a failing test: you can see the exact generated inputs and what the test spec looks like for the failing test.

For example, here you can see a snippet of the generated test specs (including one spec for valid and one for invalid data):

```jsonc
[
    {
        "title": "Adds a new blog post - valid #1",
        "description": "Adds a new blog post to the REST API.",
        "fixture": "many-posts",
        "method": "post",
        "url": "/posts",
        "headers": {
            "Content-Type": "application/json; charset=utf-8"
        },
        "body": {
            "userId": 1,
            "title": "a",
            "body": "a"
        },
        "expected": {
            "status": 201,
            "body": {
                "title": "POST /posts response",
                "type": "object",
                "required": [
                    "_id"
                ],
                "properties": {
                    "_id": {
                        "type": "string"
                    }
                },
                "additionalProperties": false
            }
        }
    },
    // --snip--
    {
        "title": "Adds a new blog post - invalid #1",
        "description": "Adds a new blog post to the REST API.",
        "fixture": "many-posts",
        "method": "post",
        "url": "/posts",
        "headers": {
            "Content-Type": "application/json; charset=utf-8"
        },
        "body": {},
        "expected": {
            "status": 400
        }
    },
    // --snip--
]
```

When you have many test failures, it can be useful to focus on one at a time so as not to be overwhelmed. We can do this by using Jest's -t argument and specifying the name of the test we’d like to run. We can use this with npm test as follows:

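```bash
npm test -- -t "Adds a new blog post - invalid #1"
```

Jest treats the -t value as a regular expression matched against test names, so this pattern also matches "invalid #10", "invalid #11", and so on. Append a $ (as in "Adds a new blog post - invalid #1$") to run only that one test.

Try it out for yourself.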
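Another way to reproduce a single failure, outside Jest entirely, is to turn its generated spec into a command you can paste into a terminal while the REST API is running. The sketch below builds a curl command from a spec object like the ones in the log above. `specToCurl` and `shellQuote` are hypothetical helpers, and `http://localhost:3000` is an assumed base URL, not necessarily the one the example projects use.

```javascript
// Hypothetical helpers: turn a generated test spec into a curl command.

// Single-quotes an argument for POSIX shells; an embedded ' becomes '\''.
function shellQuote(text) {
    return `'${String(text).replace(/'/g, `'\\''`)}'`;
}

function specToCurl(spec, baseURL) {
    const parts = ["curl", "-i", "-X", spec.method.toUpperCase()]; // -i shows response headers.
    for (const [name, value] of Object.entries(spec.headers ?? {})) {
        parts.push("-H", shellQuote(`${name}: ${value}`));
    }
    if (spec.body !== undefined) {
        parts.push("--data-raw", shellQuote(JSON.stringify(spec.body)));
    }
    parts.push(shellQuote(new URL(spec.url, baseURL).toString()));
    return parts.join(" ");
}

// The "invalid #1" spec from the log above (expected results omitted).
const spec = {
    title: "Adds a new blog post - invalid #1",
    method: "post",
    url: "/posts",
    headers: { "Content-Type": "application/json; charset=utf-8" },
    body: {},
};

console.log(specToCurl(spec, "http://localhost:3000"));
// Prints: curl -i -X POST -H 'Content-Type: application/json; charset=utf-8' --data-raw '{}' 'http://localhost:3000/posts'
```

Because the command contains the exact generated payload, you can rerun it while stepping through the server code in a debugger, without regenerating the whole test suite.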

Adding Fuzz Tests to your CI/CD Pipeline in Node.js

Given that we are using a mocked version of our MongoDB database, it’s easy to add our automated fuzz tests to our CI/CD pipeline without relying on an external service. We can do this on any CI/CD platform by running the standard Node.js commands to install and test our project:

```bash
npm ci
npm test
```

Here's an example CI pipeline for GitHub Actions:

```yaml
name: Automated fuzz tests

on:
  push:
    branches: ["main"]
  pull_request:
    branches: ["main"]

jobs:
  contract-tests:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v3 # Installs Node.js.
        with:
          node-version: 20
      - run: npm ci # Installs project dependencies.
      - run: npm test # Runs the automated fuzz tests.
```

And that concludes our whistle-stop tour of fuzz testing!

Wrapping Up

In this article, we have seen how we can generate many valid and invalid combinations of data for testing REST APIs. This helps us increase code coverage, root out problems, and have more confidence that our code is production-worthy.

Fuzz testing is an efficient way to get more tests with less effort. It helps us catch more errors early, before they become problems that are expensive to fix.

We can easily put fuzz testing on autopilot by adding it to our CI/CD pipeline. This gives us ongoing coverage and protection while we edit and evolve our code.

Happy testing!